Experiment: Privacy-Preserving Use of LLMs via LMStudio

Open-source LLMs with a slick chat interface. Most importantly, no sending of data to big tech.

Disclaimer: I am not paid by LMStudio or its affiliated companies. In fact I am not paid to write at all (I know, kind of sad…). This post records my experience of using LMStudio and is not a rigorous test or review of all its functionalities and capabilities.

Large Language Models (LLMs) are changing the way people interact with information in an unprecedented fashion, the most famous example being ChatGPT by OpenAI. The ability to interact with information using natural language, to simply ask and get answers without having to learn an additional interface (keyboard, phone screen, website search bar), is almost magical at first contact.

This is also why big tech companies like Google and Microsoft have both tried to capitalise on this by releasing their own LLMs. Countless startups have also sprung up in this Cambrian explosion of LLM-related innovation. Although the technology still has its problems, such as hallucination and being extremely power hungry, innovation in AI and engineering keeps coming up with solutions to those challenges. It is quite clear, at least to me, that LLMs, or Generative AI in general, will have a huge impact on the way we live and work in the long run.

However, one thing still bothers me: a lot of the mainstream LLMs (ChatGPT or Gemini) are developed and hosted by big tech on their servers. This means that every time we interact with one of the big tech LLMs, we are sending data to the big tech servers. Regardless of what the companies say about protecting user data, I still feel that we should not just leave it to the big tech companies to protect our privacy, especially as LLMs evolve to become part of a digital personal assistant that is with us 24/7 and knows everything about our daily lives. Just think about how Google knows the whereabouts of all the people using Google Maps. Think about the power it gives Google.

The open-source community has risen to this challenge and has released a plethora of very capable LLMs that are free to use. An example is Llama, almost ironically released by Meta. And recently, I came across LMStudio, an application that supports Windows (yuck), Mac and Linux (wooohoo!) and allows people to easily download open-source LLMs for use locally, without sending data out of their computers.

So I decided to give it a go and share my experience here.

Sorry for the long intro. Let’s get on with the show.

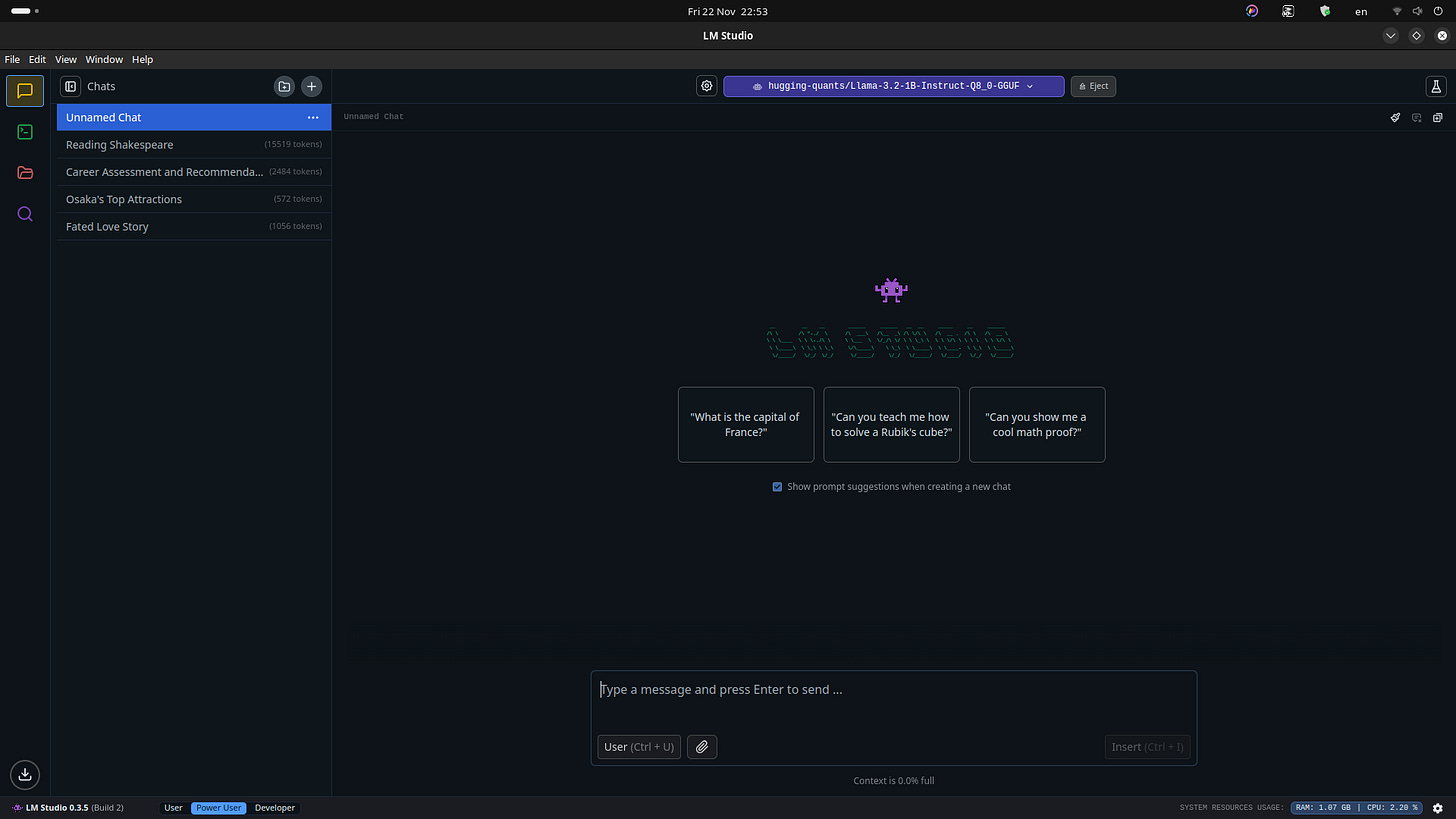

First impressions

The user interface is very slick, and very familiar to anyone who has used ChatGPT before. For those using Ubuntu, the app binary is in .AppImage format. If you find that you can’t run it by double-clicking on the binary, follow the instructions here to install fuse.

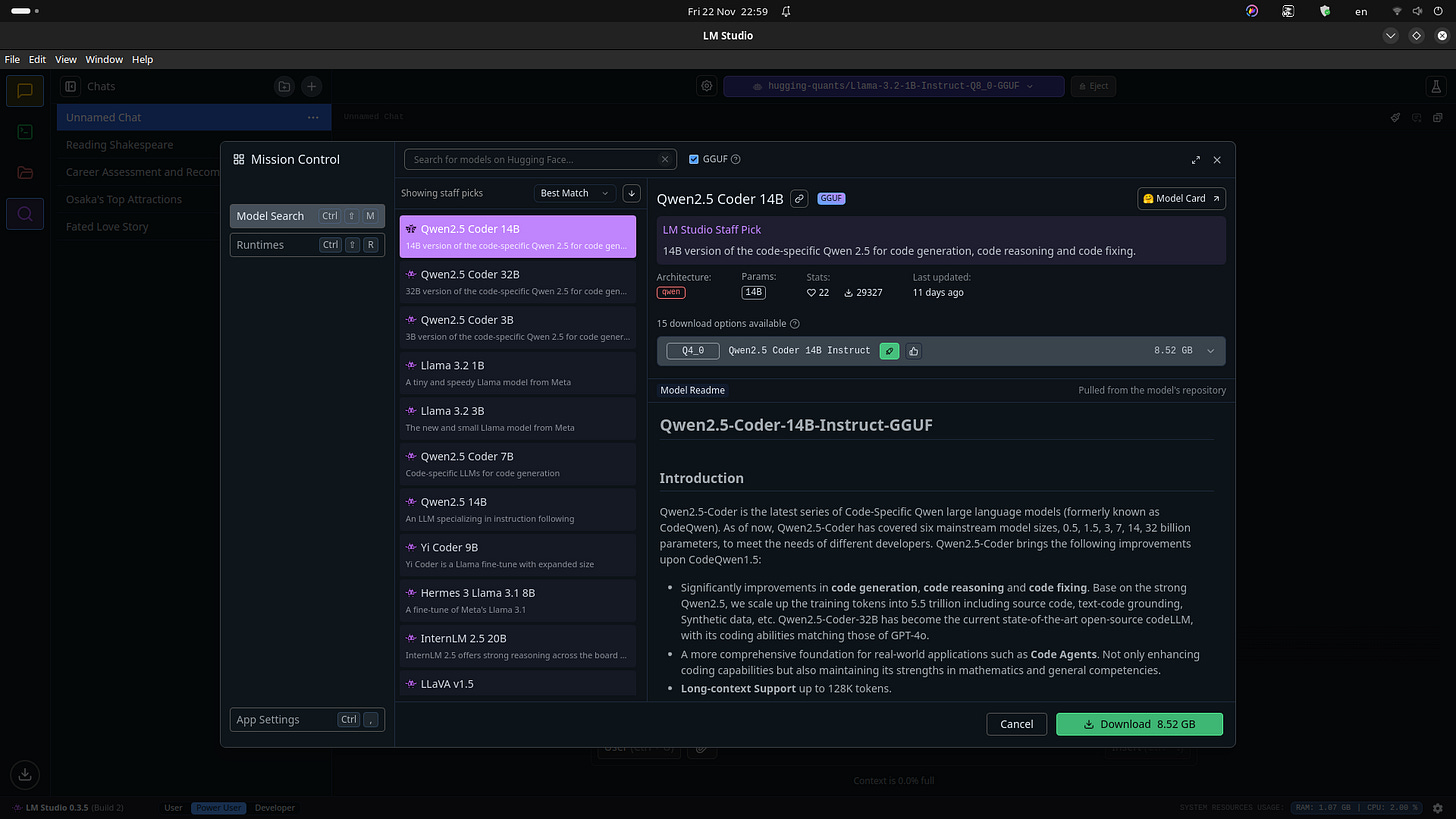

Model download

This application even makes model download easy. Simply click on the magnifying glass on the left sidebar and you can select from a range of open-source models from HuggingFace.

For this experiment, I chose the Llama 3.2 1B model that is 8-bit quantized. LMStudio uses llama.cpp as its underlying runtime, which means it can run models in the GGUF format, and it does so really fast. Also, as the LLM community pushes performance limits, smaller models have actually become very capable for many tasks.
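As a back-of-the-envelope check on why an 8-bit 1B model is so light, the size of the weights is roughly the parameter count times the bits per weight. The sketch below assumes round numbers (exactly 1 billion parameters, exactly 8 bits per weight) and ignores file metadata, the context cache and runtime overhead, so treat the results as ballpark figures only. The function name is made up for illustration.

```python
def approx_weight_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Rough size of the model weights alone, in gigabytes (10^9 bytes)."""
    bytes_total = n_params * bits_per_weight / 8
    return bytes_total / 1e9


# Assumed round numbers, not measured values.
N_PARAMS = 1e9  # "1B" taken literally

print(f"8-bit : ~{approx_weight_size_gb(N_PARAMS, 8):.1f} GB")
print(f"16-bit: ~{approx_weight_size_gb(N_PARAMS, 16):.1f} GB")
```

Under these assumptions, halving the bits per weight halves the memory needed for the weights, which is the main reason quantized small models are comfortable on modest hardware.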

I will be running most of the examples below (save for the one where I run it on my 4-year-old laptop) on my workstation, which comes with an Nvidia RTX A4500 GPU. LMStudio automatically detected my GPU and used it. No fuss.

Test 1: Simple conversation

In this test, I simply conversed with the model by asking it a hypothetical question about Romeo and Juliet. In this case, the model is relying on its built-in knowledge. Note here that I am not evaluating the model performance, just the interface.

See a short recording of the interaction below. The speed you see is the actual speed of generation. I did not speed things up.

Here’s an excerpt of the answer given to the question, “Do you think Romeo and Juliet would end up together if their families weren't at war with each other?”

While the story as we know it is set in a time of conflict between the Montagues and Capulets, I think it's possible to imagine a scenario where Romeo and Juliet might still end up together even without the feud between their families.

In fact, there are many adaptations and reinterpretations of Romeo and Juliet that explore alternative scenarios, such as:

Romeo and Juliet 2.0: In this version, the families are not at war with each other, but they're more in harmony and respect each other's differences. The story explores how their families would adjust to living together peacefully.

A utopian Romeo and Juliet: This interpretation takes place in a futuristic or alternate universe where the feud between the families is non-existent. In this world, Romeo and Juliet could still fall in love without any obstacles.

Romeo and Juliet: A historical reimagining: This version might take place in ancient Rome, where the social norms are different from our own. Perhaps the families would have more flexible views on relationships and love, allowing for a peaceful coexistence between them.

Even in these alternate scenarios, I think it's possible to imagine that Romeo and Juliet could still end up together if they chose to be in a relationship rather than being forced into one by societal pressures or family expectations. After all, their love is strong and true, and they're willing to overcome obstacles to be with each other.

…

Ultimately, the story of Romeo and Juliet is timeless, and its themes of love, passion, and loyalty continue to resonate with audiences today. While it's impossible to know for certain what would happen if their families weren't at war, I think it's possible that their love could thrive even without the obstacles they faced in the original version.

Test 2: Ask for up-to-date facts

In this test, I asked for the tourist attractions in Osaka as well as their websites. The prompt is as follows:

What are the famous tourist attractions in Osaka? Give me the links to their websites as well.

Here’s the response:

Osaka is a vibrant city with a rich cultural heritage, offering many exciting tourist attractions for visitors. Here are some of the most famous ones:

Traditional Attractions:

Osaka Castle (http://www.osakacastle.jp/)

A 150-year-old castle that played a significant role in Japan's unification and is now a UNESCO World Heritage Site.

Dotonbori (http://dotonbori.info)

A famous entertainment district known for its neon lights, street food, and unique attractions like the Glico Man sign.

Umeda Sky Building (http://www.umedaskybuilding.jp/en/index.php)

The tallest building in Osaka, offering great views of the city from its observation deck.

Food and Drink:

Tsutenkaku Tower (http://www.tsutenkakutower.jp/)

A 150-foot-tall tower with a large statue of the sun goddess Amaterasu, offering panoramic views of Osaka.

Dotonbori Riverwalk (http://www.dotenboririverwalk.com/)

A scenic walking path along the Dotonbori River, featuring street food stalls and shops selling local specialties like takoyaki and okonomiyaki.

Museums:

Osaka Museum of History (http://museum.osaka.jp/eng/index.php)

A museum showcasing Osaka's history, from prehistoric times to the present day.

National Museum of Art, Osaka (http://www.mhokuboku.go.jp/eng/)

A world-class art museum featuring over 6,000 works of art.

Other Attractions:

Universal Studios Japan (http://usj-osaka.en.universal-studios.com/en/)

An amusement park based on popular movies and TV shows like Harry Potter, Jurassic Park, and The Fast and the Furious.

Osaka Aquarium Kaiyukan (http://www.osakaoakari.kaiyukan.jp/en/index.php)

A large aquarium featuring a variety of marine life from around the world.

Please note that some attractions may have restricted access due to maintenance or special events, so it's always best to check their websites before visiting. Additionally, Osaka is a bustling city with many other interesting attractions and experiences to explore beyond these famous ones!

This is where the lack of an agentic approach, in which the LLM can invoke functions to fetch up-to-date information, prevents an accurate response. For example, the National Museum of Art, Osaka, has more than 8,000 art pieces, not 6,000. You will also find that the URLs are hallucinations.

For those not familiar with LLMs, all this is part and parcel of using them, and techniques like proper prompt engineering and retrieval-augmented generation (RAG) have come a long way in overcoming such drawbacks.

Also, I’m using a very small model (by today’s standards). A larger model might work better, but that would not be a fair comparison when I try to run LMStudio on my old laptop later.

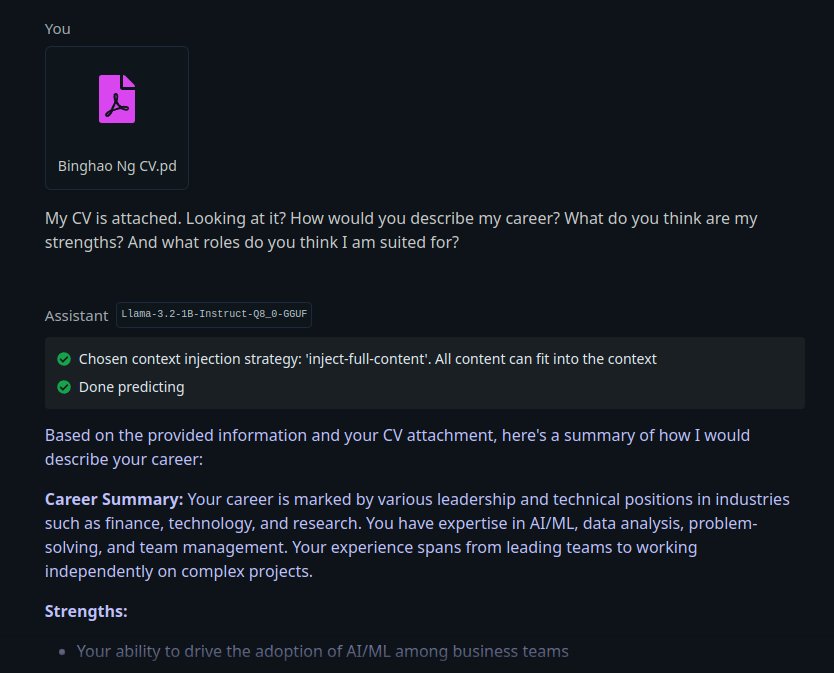

Test 3: Read a document

In this test, I uploaded my CV in PDF (yes, LMStudio supports document uploads) and asked the LLM the following question,

My CV is attached. Looking at it? How would you describe my career? What do you think are my strengths? And what roles do you think I am suited for?

If the document can fit into the context window, LMStudio will parse it and place it in the LLM context.

Pretty cool…

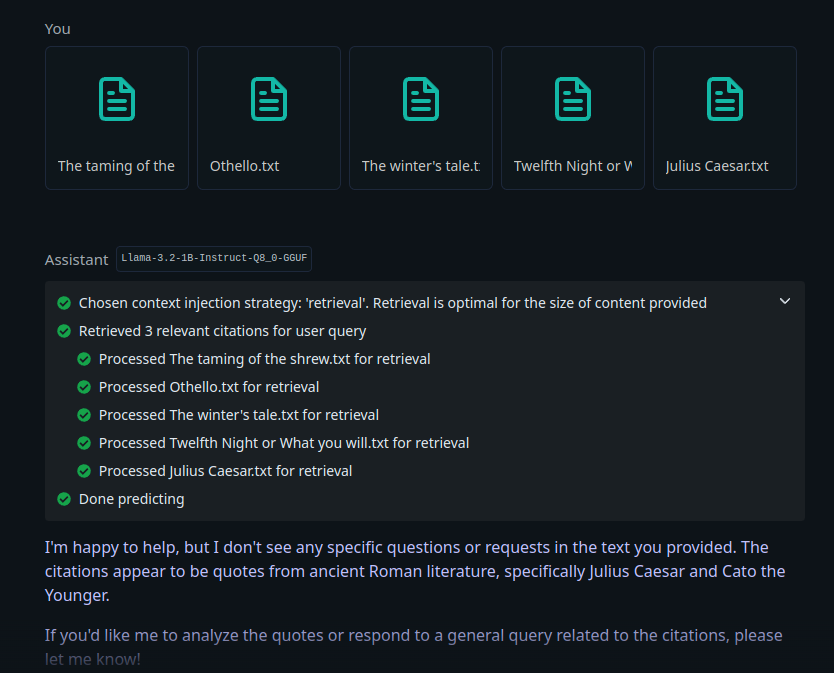

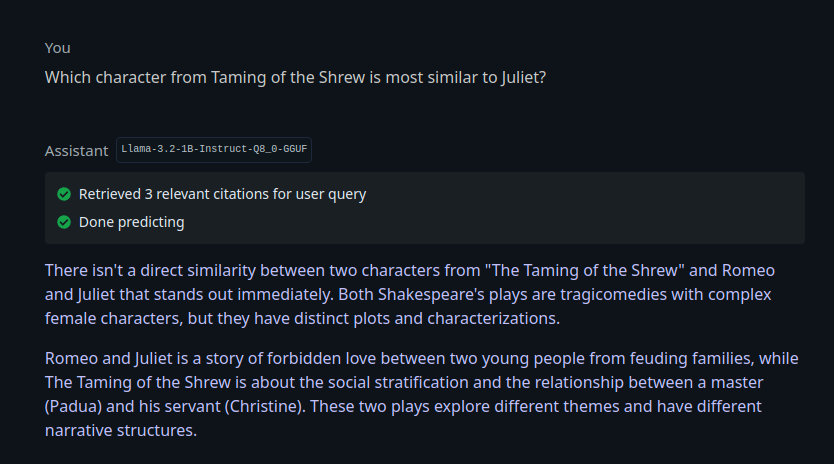

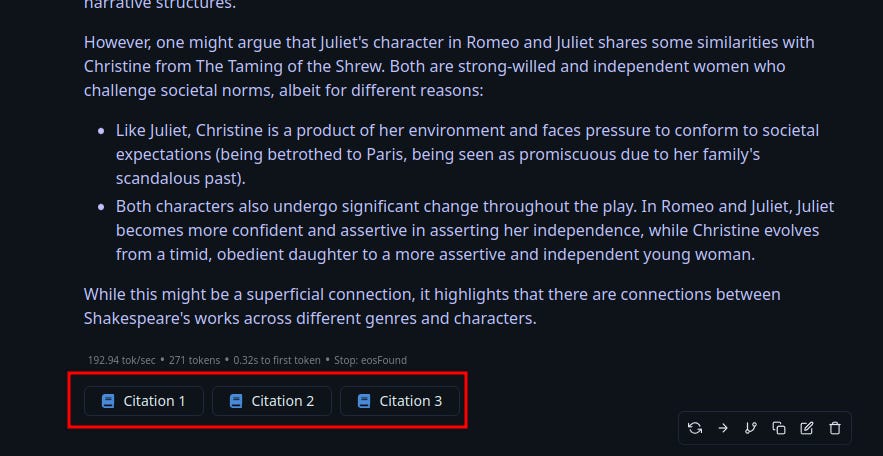

Test 4: RAG. Yes, RAG.

LMStudio also supports Retrieval-augmented Generation (RAG). This means that you can upload large amounts of text that don’t fit into an LLM’s context window and have the LLM look up and summarise information from all the text data you upload.

To test this, I uploaded 13 of Shakespeare’s plays that I downloaded from Project Gutenberg.

The thing is, LMStudio only supports uploading 5 documents at a time. So I had to batch my uploads. But once that was done, I could ask questions across all the plays I had uploaded.

It even gives me the citations.
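For readers curious about what happens conceptually, here is a toy sketch of the retrieval step in RAG: split documents into chunks, pick the chunks most relevant to the question, and paste them into the prompt along with their source names so the answer can cite them. This is not LMStudio's actual implementation. Real systems typically rank chunks with embedding similarity, whereas this sketch uses plain word overlap as a stand-in, and all function names, file names and sample sentences are made up for illustration.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def chunk_text(text, source, size=50):
    """Split a document into chunks of `size` words, remembering the source."""
    words = text.split()
    return [
        {"source": source, "text": " ".join(words[i:i + size])}
        for i in range(0, len(words), size)
    ]


def retrieve(question, chunks, k=2):
    """Return the k chunks sharing the most words with the question."""
    q_counts = Counter(tokenize(question))

    def score(chunk):
        c_counts = Counter(tokenize(chunk["text"]))
        return sum(min(q_counts[w], c_counts[w]) for w in q_counts)

    return sorted(chunks, key=score, reverse=True)[:k]


def build_prompt(question, hits):
    """Place the retrieved chunks, tagged with their sources, before the question."""
    context = "\n".join(f"[{h['source']}] {h['text']}" for h in hits)
    return (
        "Answer using only the context below and cite the sources.\n"
        f"{context}\n\nQuestion: {question}"
    )


# Tiny placeholder "documents" standing in for full plays.
docs = {
    "hamlet.txt": "Hamlet is the prince of Denmark and seeks revenge for his father",
    "macbeth.txt": "Macbeth is a Scottish general who murders King Duncan",
}
chunks = [c for name, text in docs.items() for c in chunk_text(text, name)]

question = "Who is the prince of Denmark?"
hits = retrieve(question, chunks, k=1)
print(build_prompt(question, hits))
```

Only the selected chunks reach the model, which is how a large collection can be queried even though it would never fit into the context window in one go.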

Final test: Running it on my 4 year old laptop

So now I’m running it on my 4-year-old laptop (Lenovo X13 Gen1, Ryzen 7, 16GB RAM). This is to test how it feels on a developer laptop rather than a workstation.

As you can see in the screen recording below, the generation is a lot slower: 8.24 tokens/sec versus 200+ tokens/sec on my workstation. But the overall feel is still OK, given the lightweight Llama 3.2 1B model. Not too bad for a 4-year-old laptop.

Of course, it might struggle with larger models. But with lighter models getting more capable each day, I’m staying hopeful.

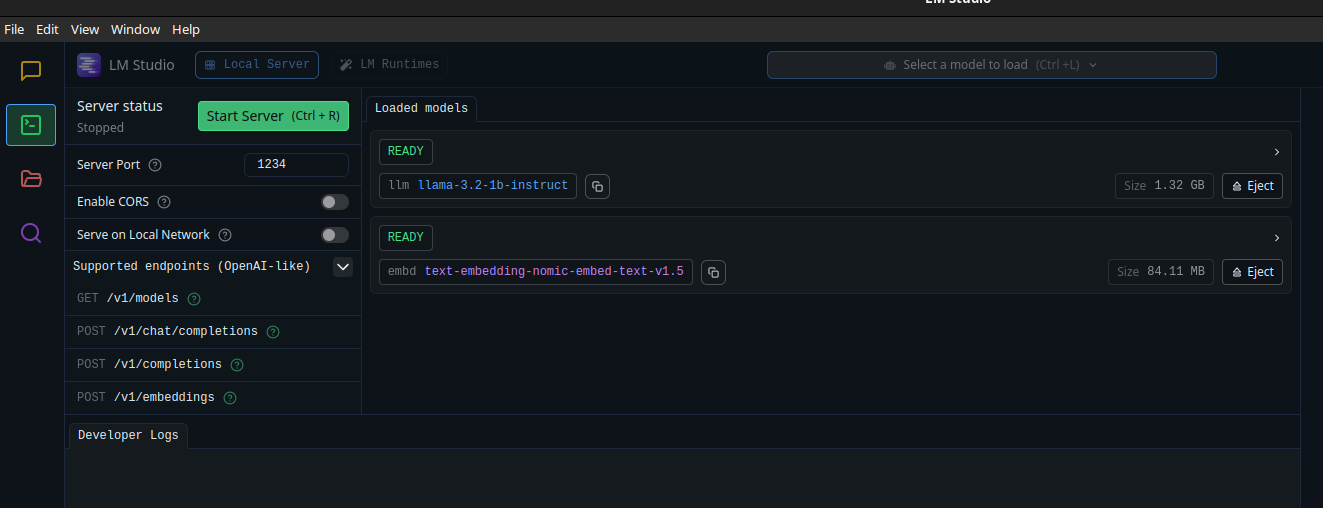

Other functionalities

An interesting thing I found is that LMStudio also supports serving out LLMs via an OpenAI-like REST API. I didn’t fully explore this. But it’s interesting.
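For anyone who wants to try the API, here is a minimal sketch of what a call might look like using only the Python standard library. It assumes the local server has been started in LMStudio, that it listens on `http://localhost:1234/v1`, and that it accepts OpenAI-style chat completion requests. The model name is a placeholder. Check the server page in the app for the actual address and model identifier on your machine.

```python
import json
import urllib.request

# Assumptions: adjust both to match what your LMStudio server page shows.
BASE_URL = "http://localhost:1234/v1"
MODEL_NAME = "llama-3.2-1b-instruct"  # placeholder identifier


def build_chat_request(prompt, model=MODEL_NAME, temperature=0.7):
    """Build an OpenAI-style chat completion payload for a single user message."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


def ask_local_llm(prompt, base_url=BASE_URL):
    """POST the prompt to the local server and return the reply text."""
    body = json.dumps(build_chat_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        reply = json.load(response)
    # OpenAI-style responses keep the generated text under choices[0].message.content
    return reply["choices"][0]["message"]["content"]


# Usage, once the local server is running:
# print(ask_local_llm("Summarise Romeo and Juliet in two sentences."))
```

Because the request goes to localhost, the prompt and the response stay on the machine, which keeps the privacy benefit that motivated this experiment.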

Final thoughts

The entire experience of using LMStudio was extremely pleasant. The user interface was slick. Model download was seamless. Runtime was fast thanks to llama.cpp.

The setup was also pain-free. I didn’t have to compile anything. I didn’t have to set up llama.cpp on my own, host a REST API and then code up a half-assed interface using Gradio (not that Gradio itself is half-assed, just my UI skills are not great). Everything just worked.

More importantly, none of the data left my workstation.

True, the fact that I can’t upload hundreds of documents can be a pain. And the lack of an agentic approach can limit the use cases to summarisation, re-writing or ideation. But that’s OK. I mostly use LLMs for those use cases at the moment, and most people use LLMs in the chat format anyway. Maybe LLM agents that can query websites will be made available on LMStudio someday.

On top of that, I have the whole open-source ecosystem to draw on. So for example, I can use a coding-specific LLM to answer my coding questions. Maybe there will be a medical LLM to which I can direct my healthcare questions or ask to summarise my health check reports.

All in all, I’m very impressed with LMStudio and will continue to incorporate it into my daily work.